VeGaS: Video Gaussian Splatting

- E3DGSAR

- Computer vision, 3DGS, Video

- January 1, 2025


Abstract

Modern video representations often prioritize reconstruction quality and compression, but they can be difficult to edit in a precise and controllable way. VeGaS (Video Gaussian Splatting) tackles this gap by adapting Gaussian Splatting ideas to 2D videos while explicitly modeling nonlinear motion and appearance changes across time.

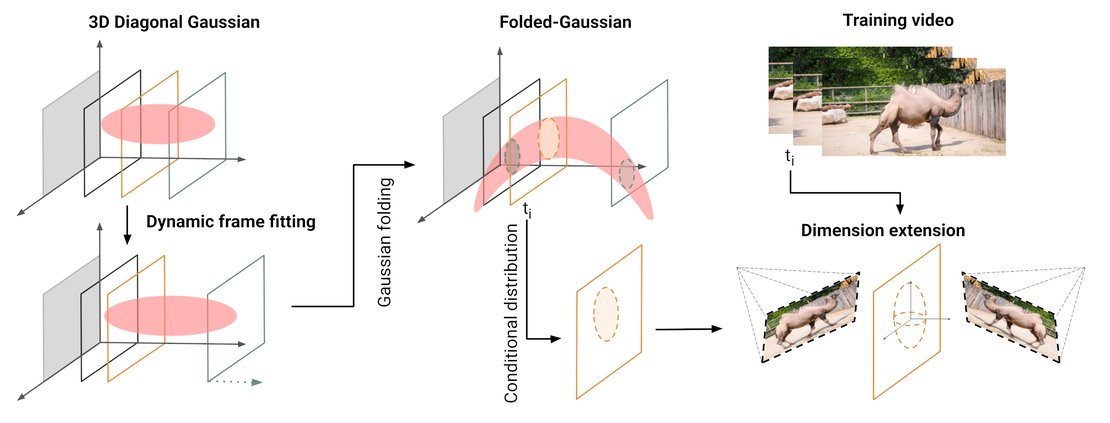

The core idea is to treat a video as a spatio-temporal field and represent it with Gaussians whose behavior over time can capture complex dynamics. To make this possible, the authors introduce Folded-Gaussians, a new family of distributions designed to model nonlinear structures in the video stream. By conditioning this spatio-temporal representation on chosen time points, VeGaS obtains frame-specific 2D Gaussians that support both high-quality reconstruction and richer, more flexible video modifications than earlier Gaussian-based video models.
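To make the conditioning step concrete, here is a minimal Python sketch, assuming a single Gaussian over (x, y, t) with a full 3×3 covariance. Conditioning on a time t uses the standard Gaussian formulas: the 2D mean shifts linearly with t − μ_t, and the 2D covariance is the Schur complement Σ_ss − Σ_st Σ_tt⁻¹ Σ_ts. The nonlinear "fold" is modeled here as a hypothetical polynomial offset added to the spatial mean, and the per-frame weight as the unnormalized marginal density of t. Both are illustrative assumptions, not the exact Folded-Gaussian definition from the paper or the code in the gmum/VeGaS repository. The names `SpatioTemporalGaussian`, `condition_on_time`, `fold_x`, and `fold_y` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class SpatioTemporalGaussian:
    """Hypothetical Gaussian over (x, y, t) plus a nonlinear spatial offset."""
    mean: Tuple[float, float, float]             # (mu_x, mu_y, mu_t)
    cov: Tuple[Tuple[float, float, float], ...]  # 3x3 symmetric positive-definite
    fold_x: Sequence[float] = ()  # hypothetical polynomial coefficients for the x-offset
    fold_y: Sequence[float] = ()  # hypothetical polynomial coefficients for the y-offset


def _poly(coeffs: Sequence[float], s: float) -> float:
    # Evaluates c0*s + c1*s^2 + ...; no constant term, so the offset is zero at t = mu_t.
    return sum(c * s ** (k + 1) for k, c in enumerate(coeffs))


def condition_on_time(g: SpatioTemporalGaussian, t: float):
    """Return (2D mean, 2D covariance, temporal weight) for the frame at time t."""
    mx, my, mt = g.mean
    (sxx, sxy, sxt), (_, syy, syt), (_, _, stt) = g.cov
    dt = t - mt

    # Linear part of the conditional mean (standard Gaussian conditioning).
    cx = mx + sxt / stt * dt
    cy = my + syt / stt * dt

    # Hypothetical nonlinear "fold": a polynomial drift of the spatial mean in time.
    cx += _poly(g.fold_x, dt)
    cy += _poly(g.fold_y, dt)

    # Conditional covariance via the Schur complement; it does not depend on t.
    cov2d = (
        (sxx - sxt * sxt / stt, sxy - sxt * syt / stt),
        (sxy - sxt * syt / stt, syy - syt * syt / stt),
    )

    # Assumed per-frame weight: unnormalized marginal density of t.
    weight = math.exp(-0.5 * dt * dt / stt)
    return (cx, cy), cov2d, weight


# Usage: one Gaussian centered at t = 0.5 with a quadratic drift in y.
g = SpatioTemporalGaussian(
    mean=(0.0, 0.0, 0.5),
    cov=((1.0, 0.0, 0.2), (0.0, 1.0, 0.0), (0.2, 0.0, 0.1)),
    fold_y=(0.0, 2.0),
)
for t in (0.3, 0.5, 0.7):
    mean2d, cov2d, w = condition_on_time(g, t)
    print(t, mean2d, w)
```

In this sketch only the mean and weight change from frame to frame, so each conditioned result is an ordinary 2D Gaussian that could be splatted like any other; richer temporal behavior comes entirely from the (assumed) fold functions.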

Experiments reported by the authors show that VeGaS outperforms state-of-the-art methods on frame reconstruction tasks and enables edits that go beyond simple linear transformations, making it a practical step toward Gaussian-based, editable video pipelines.

Paper (ScienceDirect): https://doi.org/10.1016/j.ins.2025.123033

arXiv: https://arxiv.org/abs/2411.11024

Code: https://github.com/gmum/VeGaS

Project page: https://gmum.github.io/VeGaS/